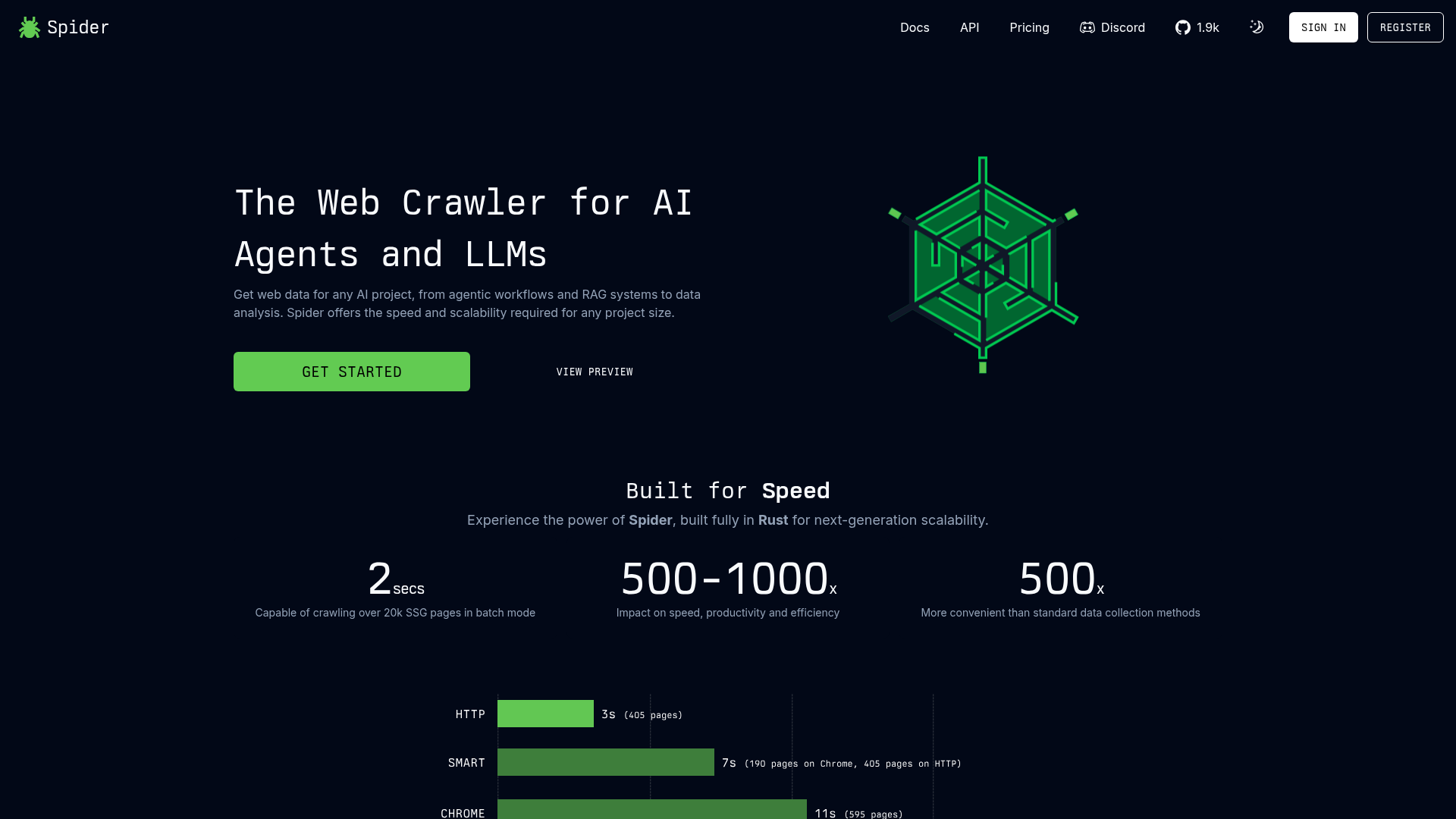

Spider

Web Crawler for AI and LLMs

Spider is a high-performance web crawler built for AI projects and large language models (LLMs). Written in Rust and designed for speed and scalability, it can crawl thousands of pages in seconds. It integrates with major AI platforms to streamline data collection and processing workflows. This makes it a good fit for developers who need fast, large-scale data extraction for AI training or operational use.